Activity: Color Shapes

Documentation for m2c2_datakit/tasks/color_shapes.py, part of the Tasks utilities in the M2C2 DataKit package.

Module Summary

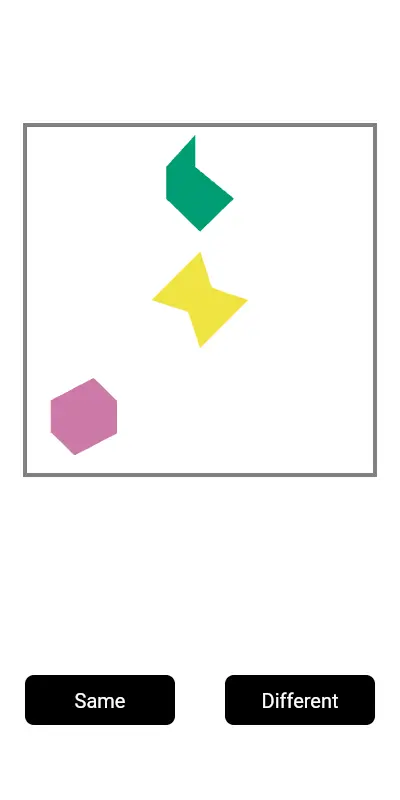

This module contains functions to score and summarize the Color Shapes task data. The Color Shapes task is a visual change detection paradigm that assesses Associative Visual Working Memory and Visual Memory 'Binding'. Participants view arrays of colored shapes and must determine whether the color-shape combinations are identical ("same") or different ("different") between study and test arrays.

The task measures signal detection performance through four response categories: hits (correctly identifying different arrays), misses (failing to detect differences), false alarms (incorrectly reporting differences in identical arrays), and correct rejections (correctly identifying identical arrays). The module calculates key metrics including accuracy rates and response times.
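The four response categories described above can be sketched as a small lookup on two values per trial. This is a minimal illustration, not the package's implementation: the function name `classify_response` is hypothetical, and it assumes the response is recorded as the string "same" or "different" alongside a boolean correctness flag.

```python
from typing import Optional


def classify_response(user_response: str, user_response_correct: bool) -> Optional[str]:
    """Map one trial to HIT, MISS, FA, or CR (illustrative sketch only)."""
    response = user_response.strip().lower()
    if response == "different":
        # Participant reported a change: a correct report is a hit,
        # an incorrect one is a false alarm.
        return "HIT" if user_response_correct else "FA"
    if response == "same":
        # Participant reported no change: a correct report is a correct
        # rejection, an incorrect one is a missed change.
        return "CR" if user_response_correct else "MISS"
    # Assumption: anything else is treated as an unscorable response.
    return None


trials = [("different", True), ("same", False), ("different", False), ("same", True)]
labels = [classify_response(response, correct) for response, correct in trials]
print(labels)  # ['HIT', 'MISS', 'FA', 'CR']
```

With trial-level data in a pandas DataFrame, a function of this shape could be applied row by row with `DataFrame.apply(..., axis=1)`.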

Key features of this module include:

- Signal detection scoring: Classifies responses into HIT, MISS, FA (False Alarm), and CR (Correct Rejection) categories

- Response time analysis: Computes response time statistics with outlier filtering

- Data quality tracking: Monitors invalid responses and filtered trials

- Comprehensive summary statistics: Generates participant-level metrics for accuracy, response time, and signal detection rates

Citations

Please visit https://m2c2-project.github.io/m2c2kit-docs/ for task citations.

Public API

color_shapes

score_accuracy(row)

Scores the accuracy of the response for a single trial in the Color Shapes task.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| row | Series | A single row of the trial-level Color Shapes task dataframe. | required |

Returns:

| Type | Description |
|---|---|
| str \| None | A string indicating the accuracy of the response, such as "CR" for Correct Rejection, "MISS", "HIT", or "FA" for False Alarm, based on the scoring criteria. None is returned if an error occurs while processing the row. |

Source code in m2c2_datakit/tasks/color_shapes.py, lines 68-82.

score_shapes(row, method='accuracy')

Score a single row of a color shapes task dataframe based on the method given.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| row | Series | A single row of a color shapes task dataframe containing the columns "user_response" and "user_response_correct". | required |
| method | str | The scoring method to use. Defaults to "accuracy". Can be "accuracy" or "signal". | 'accuracy' |

Returns:

| Type | Description |
|---|---|
| str \| None | If method is "accuracy", returns "CR", "MISS", "HIT", or "FA". If method is "signal", returns "SAME" or "DIFFERENT". Otherwise, returns None. |

Raises:

| Type | Description |
|---|---|
| Exception | If an error occurs while processing the row. |

Source code in m2c2_datakit/tasks/color_shapes.py, lines 7-65.
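To illustrate how a `method` argument could select between the two kinds of label, here is a hedged sketch using a plain dict in place of a pandas Series row. The function `score_row_sketch` is hypothetical and is not the package's code. In particular, it assumes that the "signal" label means the trial's true type ("SAME" or "DIFFERENT"), recovered from the response and its correctness; the documentation above does not spell this out, so treat that reading as an assumption.

```python
from typing import Mapping, Optional


def score_row_sketch(row: Mapping[str, object], method: str = "accuracy") -> Optional[str]:
    """Return an accuracy or signal label for one trial (illustrative sketch only)."""
    response = str(row["user_response"]).strip().lower()
    correct = bool(row["user_response_correct"])
    if response not in ("same", "different"):
        return None  # assumption: unrecognized responses are not scored

    if method == "accuracy":
        if response == "different":
            return "HIT" if correct else "FA"
        return "CR" if correct else "MISS"

    if method == "signal":
        # Assumption: a correct response matches the true trial type,
        # and an incorrect response is its opposite.
        opposite = "same" if response == "different" else "different"
        return (response if correct else opposite).upper()

    return None  # unknown method


row = {"user_response": "same", "user_response_correct": False}
print(score_row_sketch(row))                   # MISS
print(score_row_sketch(row, method="signal"))  # DIFFERENT
```

Keeping both labels behind a single entry point lets the same row-wise call produce either column by changing only the `method` argument.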

score_signal(row)

Scores the signal type of the response for a single trial in the Color Shapes task.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| row | Series | A single row of the trial-level Color Shapes task dataframe. | required |

Returns:

| Type | Description |
|---|---|
| str \| None | A string indicating the signal type of the response, either "SAME" or "DIFFERENT", based on the scoring criteria. None is returned if an error occurs while processing the row. |

Source code in m2c2_datakit/tasks/color_shapes.py, lines 85-98.

summarize(x, trials_expected=10, rt_outlier_low=100, rt_outlier_high=10000)

Summarizes the Color Shapes task data by calculating various statistics.

This function calculates the number of hits, misses, false alarms, and correct rejections based on the accuracy of responses in the Color Shapes task. It computes signal detection rates and evaluates response times, filtering out null, invalid, and outlier values.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| x | DataFrame | The trial-level scored dataset for the Color Shapes task. | required |
| trials_expected | int | The expected number of trials. Defaults to 10. | 10 |
| rt_outlier_low | int | The lower bound for filtering outliers in response times. Defaults to 100. | 100 |
| rt_outlier_high | int | The upper bound for filtering outliers in response times. Defaults to 10000. | 10000 |

Returns:

| Type | Description |
|---|---|
| Series | A Pandas Series containing the summary statistics for the Color Shapes task. |

Source code in m2c2_datakit/tasks/color_shapes.py, lines 101-199.
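The kind of summary described for `summarize` (category counts, signal detection rates, and response times with null and outlier values removed) can be sketched with the standard library alone. This is an illustration under stated assumptions, not the package's implementation: `summarize_sketch`, the dict keys `accuracy` and `rt`, the output field names, the inclusive outlier bounds, and the choice of the median are all hypothetical.

```python
from collections import Counter
from statistics import median


def summarize_sketch(trials, trials_expected=10, rt_outlier_low=100, rt_outlier_high=10000):
    """Summarize scored trials (illustrative sketch only)."""
    counts = Counter(t["accuracy"] for t in trials)
    hits, misses = counts["HIT"], counts["MISS"]
    false_alarms, correct_rejections = counts["FA"], counts["CR"]

    # Rates are conditioned on the true trial type:
    # changed arrays for hits, unchanged arrays for false alarms.
    n_changed = hits + misses
    n_unchanged = false_alarms + correct_rejections

    # Drop null response times, then drop values outside the bounds
    # (assumption: the bounds are inclusive).
    rts = [t["rt"] for t in trials if t.get("rt") is not None]
    kept = [rt for rt in rts if rt_outlier_low <= rt <= rt_outlier_high]

    return {
        "n_trials": len(trials),
        "n_trials_expected": trials_expected,
        "n_hit": hits,
        "n_miss": misses,
        "n_fa": false_alarms,
        "n_cr": correct_rejections,
        "hit_rate": hits / n_changed if n_changed else None,
        "fa_rate": false_alarms / n_unchanged if n_unchanged else None,
        "n_rt_filtered": len(rts) - len(kept),
        "median_rt": median(kept) if kept else None,
    }


trials = [
    {"accuracy": "HIT", "rt": 850},
    {"accuracy": "MISS", "rt": 1200},
    {"accuracy": "FA", "rt": 50},       # below rt_outlier_low, filtered
    {"accuracy": "CR", "rt": 900},
    {"accuracy": "CR", "rt": None},     # null response time, ignored
    {"accuracy": "HIT", "rt": 15000},   # above rt_outlier_high, filtered
]
summary = summarize_sketch(trials)
print(summary["n_hit"], summary["n_fa"], summary["median_rt"])  # 2 1 900
```

Returning `None` for a rate when its denominator is zero avoids a division error for participants who saw no trials of that type; whether the package handles that case the same way is not stated above.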